December: Aerial Manipulator Integration & Simulation Validation

Work Carried Out

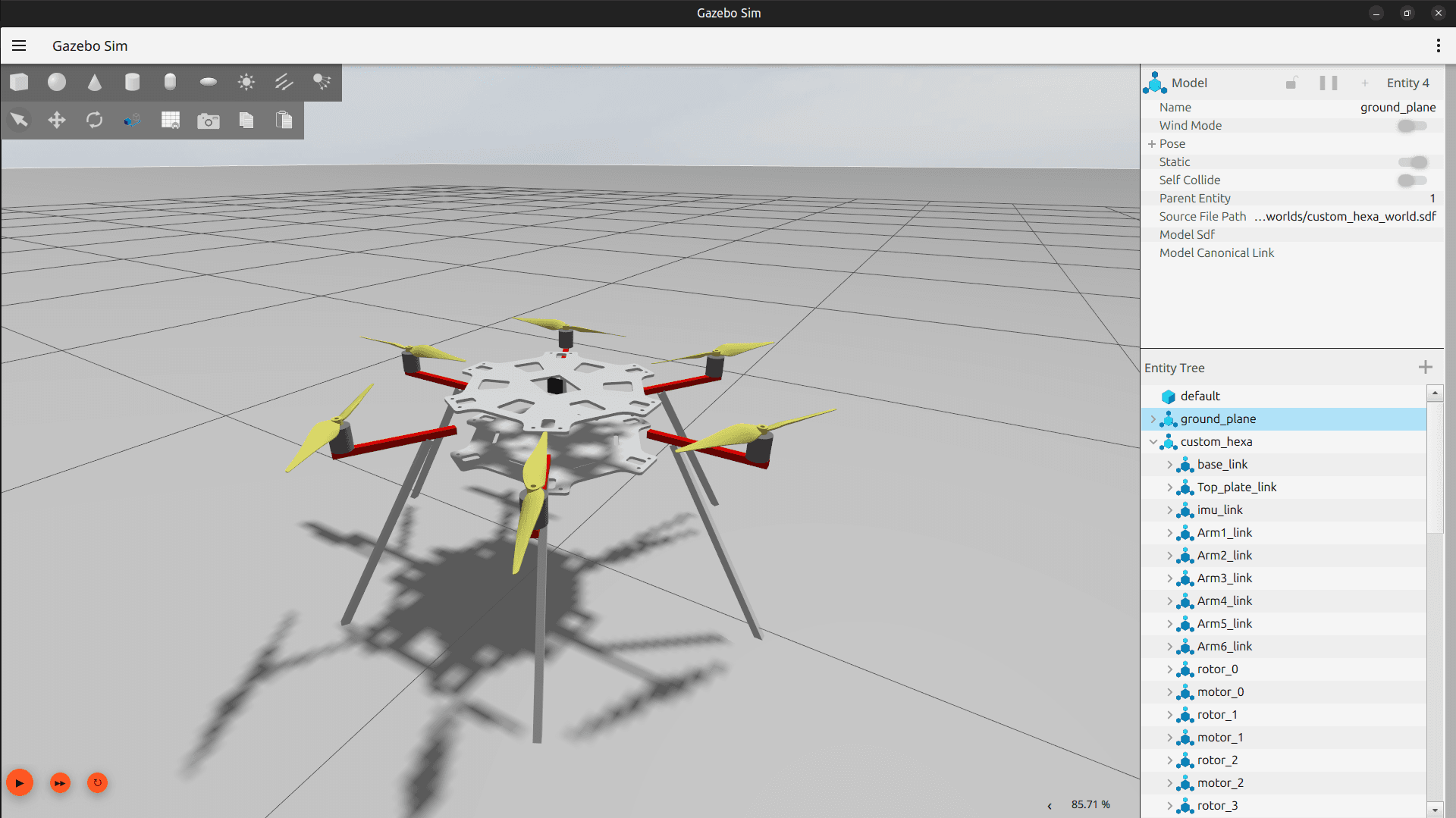

In December, I focused on validating and extending the hexacopter and aerial manipulator simulation. I validated the hexacopter SDF model through motor tests in Gazebo, where stable hover was achieved at approximately 459 rad/s per motor with the current mass and configuration, indicating that the simulation closely matches the real platform.
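
As a rough cross-check of the hover result, the sketch below relates rotor speed to vehicle mass under the common assumption that each rotor produces thrust proportional to the square of its speed. The quadratic thrust model, the function names, and the motor constant used in the example are assumptions made for illustration; the report does not state the platform's mass or motor constant.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def hover_speed(mass_kg, motor_constant, n_rotors=6):
    """Rotor speed (rad/s) at which total thrust equals weight.

    Assumes thrust per rotor = motor_constant * omega**2.
    """
    return math.sqrt(mass_kg * G / (n_rotors * motor_constant))


def implied_mass(omega, motor_constant, n_rotors=6):
    """Mass (kg) that would hover at rotor speed omega under the same model."""
    return n_rotors * motor_constant * omega ** 2 / G


# Placeholder motor constant (N per (rad/s)^2), NOT taken from the report.
K_ASSUMED = 8.5e-6

mass = implied_mass(459.0, K_ASSUMED)
print(f"Mass implied by 459 rad/s with the assumed constant: {mass:.3f} kg")
print(f"Hover speed recovered from that mass: {hover_speed(mass, K_ASSUMED):.1f} rad/s")
```

Substituting the model's actual mass and motor constant would show whether the observed 459 rad/s agrees with this simple static balance.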

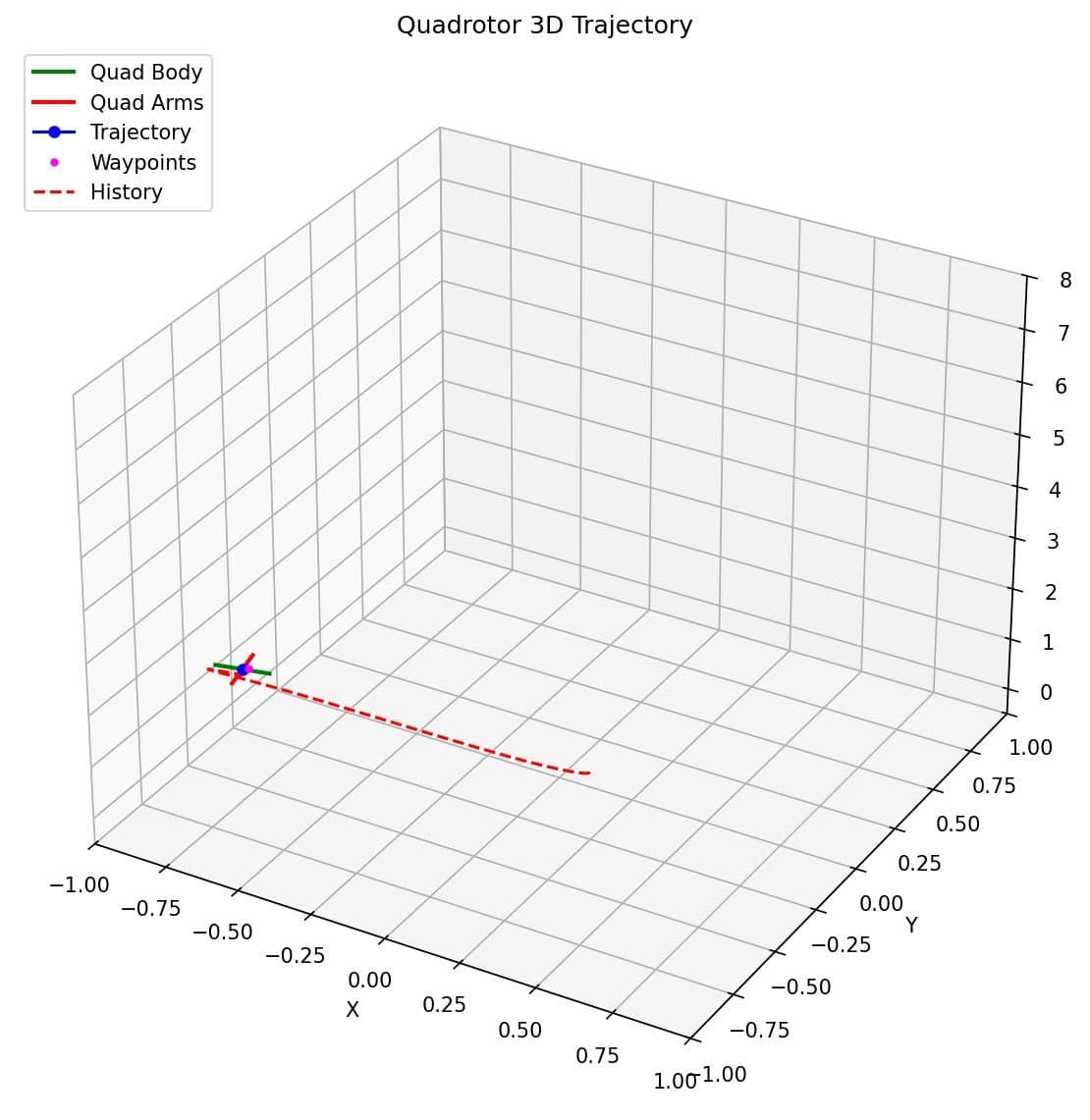

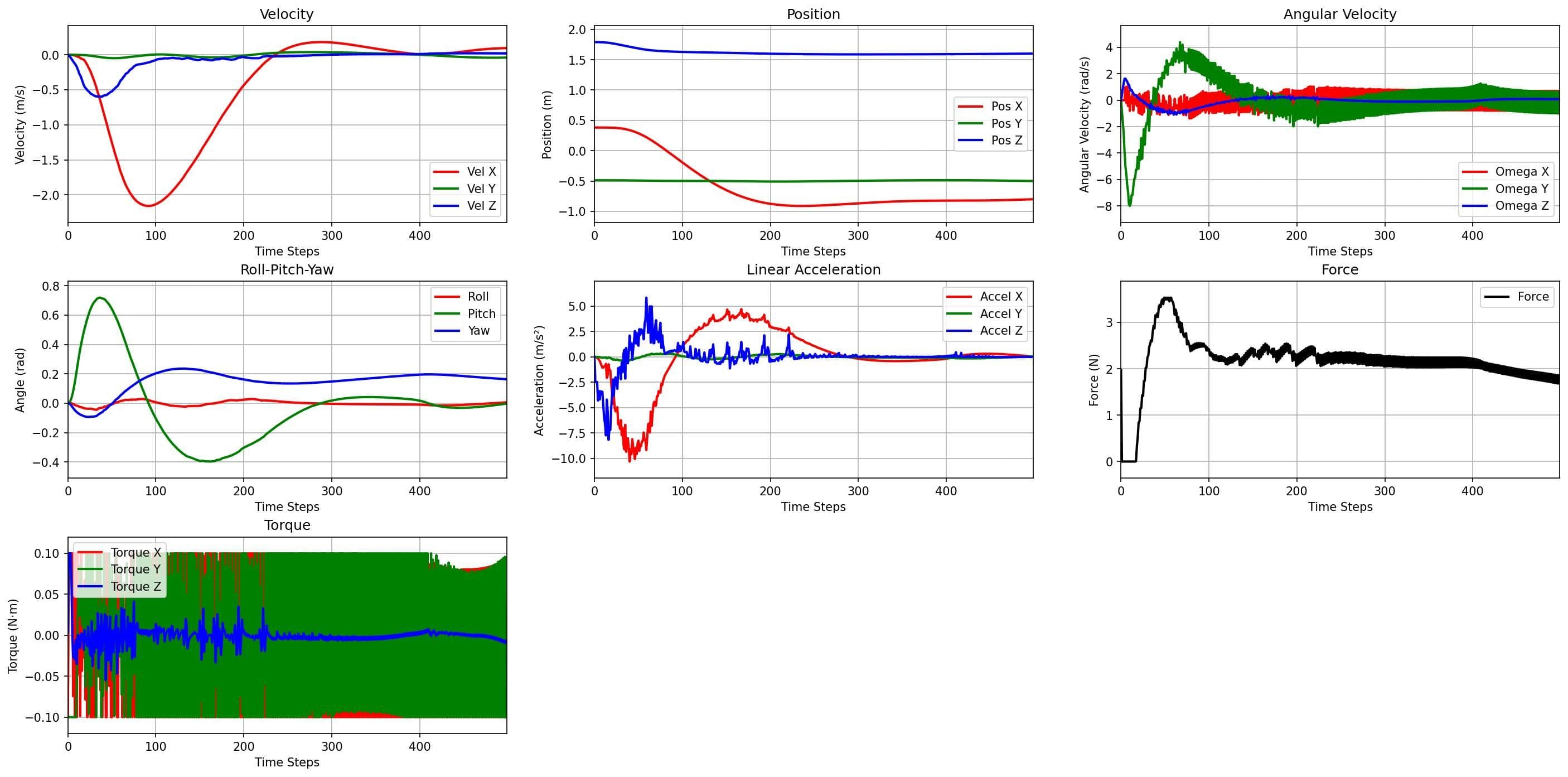

I then integrated the 3-DoF manipulator with the hexacopter in simulation and tested the combined aerial manipulator system. These tests revealed significant dynamic disturbances on the hexacopter caused by manipulator motion, highlighting important coupling effects that must be addressed in future control design.

In parallel, I planned the design of a residual reinforcement learning controller. This included defining the action space, observation space, and reward structure, and outlining how learned residual actions would augment the baseline PX4 controller while preserving stability and supporting future sim-to-real transfer. No RL training or implementation was carried out at this stage.
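
The residual idea described above can be sketched as a bounded correction added to the baseline command. This is only a minimal illustration: the report does not specify at which level the residual acts (for example motor commands or attitude setpoints), so the normalized command range, the scale factor, and the function names here are assumptions.

```python
def clip(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def compose_action(baseline, residual, scale=0.1, low=0.0, high=1.0):
    """Add a bounded learned residual to each baseline command.

    baseline: commands from the baseline controller (assumed normalized).
    residual: raw policy outputs, clipped to [-1, 1] before scaling.
    scale:    hypothetical authority limit, so the learned part can only
              shift the baseline command by a small amount.
    """
    return [
        clip(b + scale * clip(r, -1.0, 1.0), low, high)
        for b, r in zip(baseline, residual)
    ]


# With a zero residual the baseline command passes through unchanged.
print(compose_action([0.5] * 6, [0.0] * 6))
```

Keeping the residual's authority small is one way to keep the baseline controller in charge of stability while the learned term compensates for disturbances.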

I also contributed to the mid-review presentation and report documentation, summarizing simulation results, system integration progress, and planned control developments.

Problems Encountered

A key issue arose from the model spawning mechanism. The hexacopter was spawned through PX4 SITL, while the manipulator was spawned separately by Gazebo. Attaching the manipulator at the world level was therefore difficult and resulted in incorrect model linkage and dynamics.

Solutions / How Issues Were Addressed

This was resolved by integrating the manipulator directly into the hexacopter SDF model, ensuring that both the hexacopter and manipulator were spawned together under PX4 SITL. This provided correct kinematic and dynamic coupling and enabled reliable combined-system simulation and testing.
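
The report does not say whether the two models were merged by hand or by script. As one possible illustration, the sketch below copies the links and joints of a manipulator SDF into a vehicle SDF and adds a fixed joint between them, so that a single model is spawned. All model, link, and joint names are hypothetical, and pose offsets, plugins, and name collisions are deliberately ignored.

```python
import xml.etree.ElementTree as ET


def merge_models(vehicle_sdf, arm_sdf, parent_link, arm_base_link):
    """Return an SDF string with the arm's links and joints inside the vehicle model."""
    vehicle_root = ET.fromstring(vehicle_sdf)
    arm_root = ET.fromstring(arm_sdf)
    vehicle_model = vehicle_root.find("model")
    arm_model = arm_root.find("model")

    # Copy every link and joint of the arm into the vehicle model.
    for element in list(arm_model):
        if element.tag in ("link", "joint"):
            vehicle_model.append(element)

    # Rigidly attach the arm base to the chosen vehicle link.
    mount = ET.SubElement(
        vehicle_model, "joint", {"name": "arm_mount_joint", "type": "fixed"}
    )
    ET.SubElement(mount, "parent").text = parent_link
    ET.SubElement(mount, "child").text = arm_base_link
    return ET.tostring(vehicle_root, encoding="unicode")


# Tiny stand-in models; real SDF files also carry inertial, visual, and collision data.
VEHICLE = '<sdf version="1.6"><model name="hexacopter"><link name="base_link"/></model></sdf>'
ARM = (
    '<sdf version="1.6"><model name="arm">'
    '<link name="arm_base"/><link name="arm_link1"/>'
    '<joint name="arm_joint1" type="revolute">'
    "<parent>arm_base</parent><child>arm_link1</child></joint>"
    "</model></sdf>"
)

merged = merge_models(VEHICLE, ARM, "base_link", "arm_base")
print(merged)
```

Because the arm now lives inside the vehicle model, the physics engine treats both as one articulated body, which is what gives the coupled dynamics described above.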