Navigating the Agentic AI Jungle: A Guide to Choosing Your Framework

In my last post, we explored the Zoo of Reinforcement Learning algorithms. Today, we are stepping into a new, rapidly expanding jungle: Agentic AI.

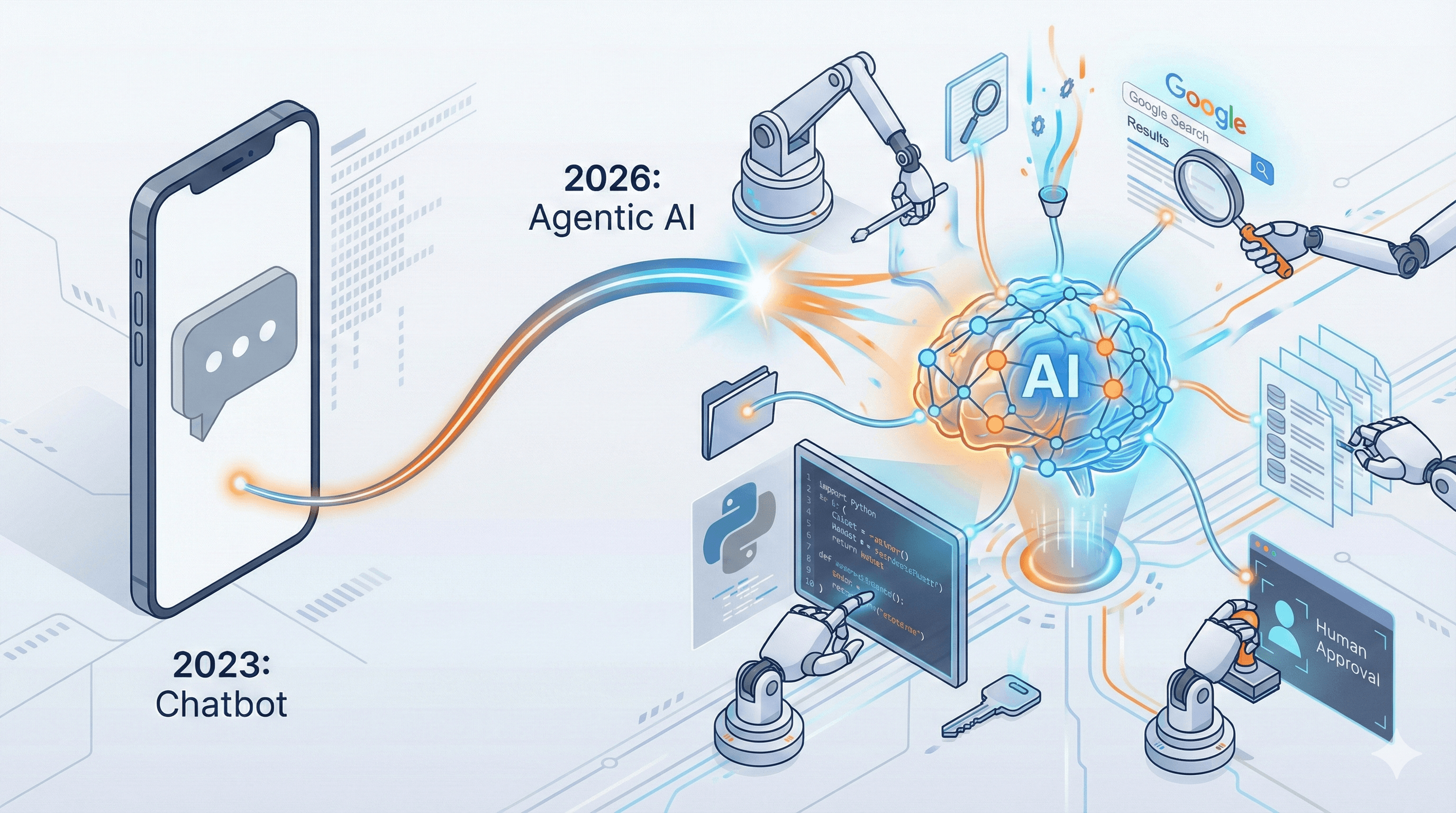

If you have been following the AI space recently, you have likely heard the buzz. We are moving past AI that just chats, like a standard ChatGPT session, to AI that acts. This is Agentic AI: systems that can reason, plan, and execute tasks to achieve a goal on their own.

But just like with RL, there is not one perfect tool for building these agents. There is a whole ecosystem of frameworks, each with its own philosophy. Let’s break down what Agentic AI is, look at the top frameworks, and help you decide which one to use for your next project.

What Exactly is an Agent?

Before we look at the tools, we need to understand the mechanics. A standard Large Language Model (LLM) is a passive engine. It relies entirely on its training data, which often leads to hallucinations when it doesn't know an answer.

An Agent is different because it has access to Tools. Instead of guessing, it can look things up. Most agents follow a logic pattern called ReAct (Reason + Act):

- Thought: The agent looks at the user goal and thinks about what to do first.

- Action: It chooses a specific tool, such as a Google search or a Python script.

- Observation: It reads the actual result of that action.

- Repeat: It loops through this process until the goal is met.

To make this work in a real-world application, you need a framework to manage these loops, handle errors, and keep track of memory.
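The Thought, Action, Observation loop above can be sketched in a few lines of plain Python. This is a minimal illustration, not any framework's API: `scripted_model` is a hypothetical stand-in for a real LLM call, `calculator` is a made-up tool, and `max_steps` is an assumed safety limit of the kind a framework would normally manage for you.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (thought, action, observation)


def calculator(expression: str) -> str:
    """Hypothetical tool: evaluate 'a op b' for +, - and *."""
    left, op, right = expression.split()
    a, b = float(left), float(right)
    if op == "+":
        return str(a + b)
    if op == "-":
        return str(a - b)
    if op == "*":
        return str(a * b)
    raise ValueError(f"Unsupported operator: {op}")


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def scripted_model(goal: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stand-in for an LLM call. Returns (thought, action, action_input)."""
    if not history:
        return ("I should compute the product first.", "calculator", "6 * 7")
    last_observation = history[-1][2]
    return ("I now have the answer.", "finish", last_observation)


def run_agent(goal: str, model, tools, max_steps: int = 5) -> str:
    """Loop Thought -> Action -> Observation until the model finishes."""
    history: List[Step] = []
    for _ in range(max_steps):
        # Thought: the model decides what to do next.
        thought, action, action_input = model(goal, history)
        if action == "finish":
            return action_input
        # Action: look up and call the chosen tool.
        tool = tools.get(action)
        observation = tool(action_input) if tool else f"Unknown tool: {action}"
        # Observation: record the result so the next thought can use it.
        history.append((thought, action, observation))
    return "Stopped: step limit reached"


answer = run_agent("What is 6 times 7?", scripted_model, TOOLS)
print(answer)  # prints 42.0
```

The step limit matters more than it looks: without it, a model that never chooses "finish" would loop forever, which is exactly the kind of failure a framework is meant to catch.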

The Elephant in the Room: LangChain versus LangGraph

You cannot talk about agents without mentioning LangChain. It was one of the first frameworks to make LLMs actionable and remains one of the most widely used ecosystems. However, if you look at the industry today, you will notice a major shift in how it is used.

For simple prototypes, the standard AgentExecutor inside LangChain is still widely used. It works great when you need a linear "Think -> Act" loop. But as soon as you want complex behavior, like looping back to a previous step multiple times or waiting for human approval, the standard executor becomes difficult to manage.

The 2026 Reality: Today, developers largely split their architecture:

- LangChain is the foundation layer. It provides the building blocks, like connectors for tools and prompt templates.

- LangGraph is the specialized orchestration layer built on top of it. It was created because real-world production agents need cycles, not just straight lines.

The Analogy: LangChain is the box of auto parts. LangGraph is the blueprint that tells those parts how to assemble into a working engine.

The Frameworks: Meet the Zoo

1. LangGraph: The State Machine Architect

LangGraph treats your agent like a flowchart, or more accurately, a state machine. It is the evolution of the LangChain ecosystem designed for production.

- How it works: You define nodes (actions) and edges (the paths between them). You have total control over the flow.

- Best for: Engineers who need a predictable control flow and "Human-in-the-loop" features. The model's output can still vary, but the path it is allowed to take is fixed by your graph. If your agent needs to pause and ask a human manager for approval before sending an email, this is a strong choice.

- Pros: Extremely robust and industry-standard for complex, cyclic workflows.

- Cons: It has a steep learning curve and requires more code to get started than a simple AgentExecutor.
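To make the nodes-and-edges idea concrete, here is a minimal sketch of a state machine with a cycle and a human approval step, written in plain Python. It deliberately does not use the LangGraph API; the node names, the `END` marker, and the injected `approver` callable are all assumptions made for illustration.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
END = "__end__"  # hypothetical marker for the terminal state


def draft_email(state: State) -> State:
    """Node: write (or rewrite) the draft."""
    state["attempts"] = state.get("attempts", 0) + 1
    state["draft"] = f"Draft v{state['attempts']}"
    return state


def human_review(state: State) -> State:
    """Node: ask a human. The approver is injected so the sketch runs unattended."""
    state["approved"] = state["approver"](state["draft"])
    return state


def send_email(state: State) -> State:
    """Node: the side effect we only want after approval."""
    state["sent"] = True
    return state


NODES: Dict[str, Callable[[State], State]] = {
    "draft": draft_email,
    "review": human_review,
    "send": send_email,
}

# Edges: each maps the current state to the name of the next node.
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",
    "review": lambda s: "send" if s["approved"] else "draft",  # the cycle
    "send": lambda s: END,
}


def run_graph(state: State, entry: str = "draft", max_steps: int = 10) -> State:
    """Walk the graph from the entry node until END or the step limit."""
    current, steps = entry, 0
    while current != END:
        if steps >= max_steps:
            raise RuntimeError("Step limit reached before END")
        state = NODES[current](state)
        current = EDGES[current](state)
        steps += 1
    return state


# The stand-in manager rejects the first draft and approves the second.
result = run_graph({"approver": lambda draft: draft.endswith("v2")})
print(result["draft"], result["sent"])  # prints Draft v2 True
```

The edge from "review" back to "draft" is the part a linear executor cannot express cleanly, and it is why the graph model suits approval workflows.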

2. CrewAI: The Orchestrator of Teams

CrewAI takes a high-level, human-centric approach. Instead of thinking about code nodes, you think about Roles. You might create a "Researcher" agent and a "Writer" agent.

- How it works: You define a "Crew" of agents with goals and backstories. They talk to each other to solve the problem, much like a human project team.

- Best for: Rapid prototyping and business automation tasks where roles are clearly defined.

- Pros: Very intuitive and fast to set up. It handles delegation between agents automatically.

- Cons: It can be harder to debug if the agents get stuck in a "conversation loop" of complimenting each other without making progress.
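The role-based idea can be sketched without any framework at all. The example below is a plain-Python illustration, not the CrewAI API: `RoleAgent`, `run_crew`, and the lambda "workers" are hypothetical stand-ins for LLM-backed agents, and the hand-off is a simple sequential pipeline.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class RoleAgent:
    role: str
    goal: str
    backstory: str
    work: Callable[[str], str]  # stand-in for an LLM call shaped by the role

    def perform(self, task_input: str) -> str:
        return self.work(task_input)


def run_crew(agents: List[RoleAgent], brief: str) -> Tuple[str, List[str]]:
    """Pass the brief through each agent in order, like a project hand-off."""
    output = brief
    transcript = []
    for agent in agents:
        output = agent.perform(output)
        transcript.append(f"{agent.role}: {output}")
    return output, transcript


researcher = RoleAgent(
    role="Researcher",
    goal="Collect facts on the topic",
    backstory="A meticulous analyst",
    work=lambda topic: f"notes on {topic}",
)
writer = RoleAgent(
    role="Writer",
    goal="Turn notes into an article",
    backstory="A clear, concise author",
    work=lambda notes: f"Article based on {notes}",
)

final, transcript = run_crew([researcher, writer], "agent frameworks")
print(final)  # prints Article based on notes on agent frameworks
```

Keeping a transcript of each hand-off is a cheap way to spot the "conversation loop" problem early, since you can see when outputs stop changing.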

3. Microsoft AutoGen: The Conversational Powerhouse

AutoGen is built around the idea that agents solve problems best when they talk to each other.

- How it works: Multi-agent conversation. You might have one agent write code and another agent run it. If the code fails, the second agent tells the first one to fix it.

- Best for: Coding tasks, heavy logic problems, and research-heavy workflows.

- Pros: Unmatched for self-correcting code and iterative problem-solving.

- Cons: It can get expensive in terms of token usage because agents "talk" a lot to reach a conclusion.
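The write, run, and fix conversation described above can be sketched as a two-agent loop. This is a minimal plain-Python illustration, not the AutoGen API: `coder_agent` is a hypothetical stand-in for an LLM that produces a buggy function first and a fixed one after feedback, and `executor_agent` runs the code with `exec`, which is only acceptable here because the code is hard-coded and trusted.

```python
from typing import Optional, Tuple


def coder_agent(task: str, feedback: Optional[str]) -> str:
    """Stand-in for an LLM coder: buggy on the first try, fixed after feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def executor_agent(code: str) -> Tuple[bool, str]:
    """Run the code and one check. Returns (ok, feedback for the coder)."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # trusted, hard-coded code only
        result = namespace["add"](2, 3)
    except Exception as exc:
        return False, f"Execution failed: {exc!r}"
    if result != 5:
        return False, f"add(2, 3) returned {result}, expected 5"
    return True, "All checks passed"


def converse(task: str, max_rounds: int = 3) -> Tuple[str, int]:
    """Alternate coder and executor until the check passes or rounds run out."""
    feedback: Optional[str] = None
    for round_number in range(1, max_rounds + 1):
        code = coder_agent(task, feedback)
        ok, feedback = executor_agent(code)
        if ok:
            return code, round_number
    raise RuntimeError(f"No passing code after {max_rounds} rounds: {feedback}")


code, rounds = converse("Write add(a, b)")
print(rounds)  # prints 2
```

Each extra round is another pair of model calls, which is where the token cost mentioned above comes from. A round limit like `max_rounds` keeps that cost bounded.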

4. LlamaIndex: The Librarian (RAG Specialist)

While other frameworks focus on the "Brain," LlamaIndex focuses on the Memory. It is the undisputed king of Retrieval-Augmented Generation (RAG).

- How it works: It breaks your data into "chunks," creates an index map (Vector Store or Knowledge Graph), and allows agents to search it with high precision.

- Best for: Agents that need to read, search, and synthesize thousands of documents.

- Pros: Best-in-class for RAG. Through LlamaHub, it has hundreds of connectors to almost every data source imaginable.

- The "Secret" Power: LlamaIndex plays nicely with others. You can build a LangGraph agent that uses a LlamaIndex engine as a tool. It doesn't have to be one or the other.

- Cons: While it has its own agent workflows, it is generally less flexible for general-purpose control tasks compared to LangGraph.
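The chunk, index, and search pipeline can be shown in miniature. The sketch below is not the LlamaIndex API, and it swaps in word counts and cosine similarity where a real system would use learned embeddings and a vector store. The function names and the sample text are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, chunk_size: int = 6) -> List[str]:
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag of lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(chunks: List[str]) -> List[Tuple[str, Counter]]:
    """Store each chunk next to its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index: List[Tuple[str, Counter]], query: str, top_k: int = 1) -> List[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


text = (
    "LangGraph models agents as state machines. "
    "CrewAI organizes agents into role-based crews. "
    "AutoGen relies on multi-agent conversation."
)
chunks = chunk_text(text, chunk_size=6)
index = build_index(chunks)
print(retrieve(index, "Which framework uses state machines?"))
# prints ['LangGraph models agents as state machines.']
```

A `retrieve` function like this is also the shape of the "tool" another framework's agent would call, which is what makes the mix-and-match approach mentioned above practical.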

The Verdict: Which One Should You Choose?

The answer depends on your specific environment.

- Are you building a professional application? Use LangGraph. It gives you the control you need to ensure the agent follows your business rules (and waits for your approval) every single time.

- Do you want to automate a team-based process quickly? Use CrewAI. It is the fastest way to see the magic of multi-agent systems in action.

- Are you building a tool to write or debug software? Use AutoGen. Its ability to run code and self-correct makes it a natural fit for dev tools.

- Is your agent essentially a super-librarian? Use LlamaIndex. It is built from the ground up to handle massive knowledge bases, and can easily plug into the other frameworks.

Final Thoughts

We are still in the early days of Agentic AI. We are shifting from "Prompt Engineering" to "Agent Engineering." The goal is no longer just to write a better prompt, but to build a better system.

My advice? Pick a boring task in your daily life and try to build an agent to solve it. The best way to master the zoo is to step inside the cage.

References & Further Reading

If you want to start building, here are the official docs for the tools mentioned above:

- LangGraph Documentation: The official guide to building stateful agents with LangChain. https://langchain-ai.github.io/langgraph/

- CrewAI Official Docs: Guides on setting up Crews, Agents, and Tasks. https://docs.crewai.com/

- Microsoft AutoGen: The framework for enabling next-gen LLM applications via multi-agent conversation. https://microsoft.github.io/autogen/

- LlamaIndex Documentation: The central hub for all things RAG and data ingestion. https://docs.llamaindex.ai/

- The ReAct Paper: "ReAct: Synergizing Reasoning and Acting in Language Models" (Yao et al., 2022). The foundational paper that introduced the "Thought-Action-Observation" loop used by most agents today. https://arxiv.org/abs/2210.03629

Note: AI tools were used to assist with writing and editing.