Mastering the Algorithm Zoo: A Guide to Choosing RL for Control Tasks

In the world of Reinforcement Learning (RL), we often talk about the "Algorithm Zoo." It is a crowded space filled with acronyms like PPO, SAC, and TD3. If you are a control engineer or a developer trying to teach a machine to perform a task, picking the right tool from this zoo is your first and most important step.

Choosing the wrong algorithm doesn't just mean slow progress; it often means the system won't learn at all. This guide will help you navigate these choices using simple, professional logic.

Understanding Your "Space"

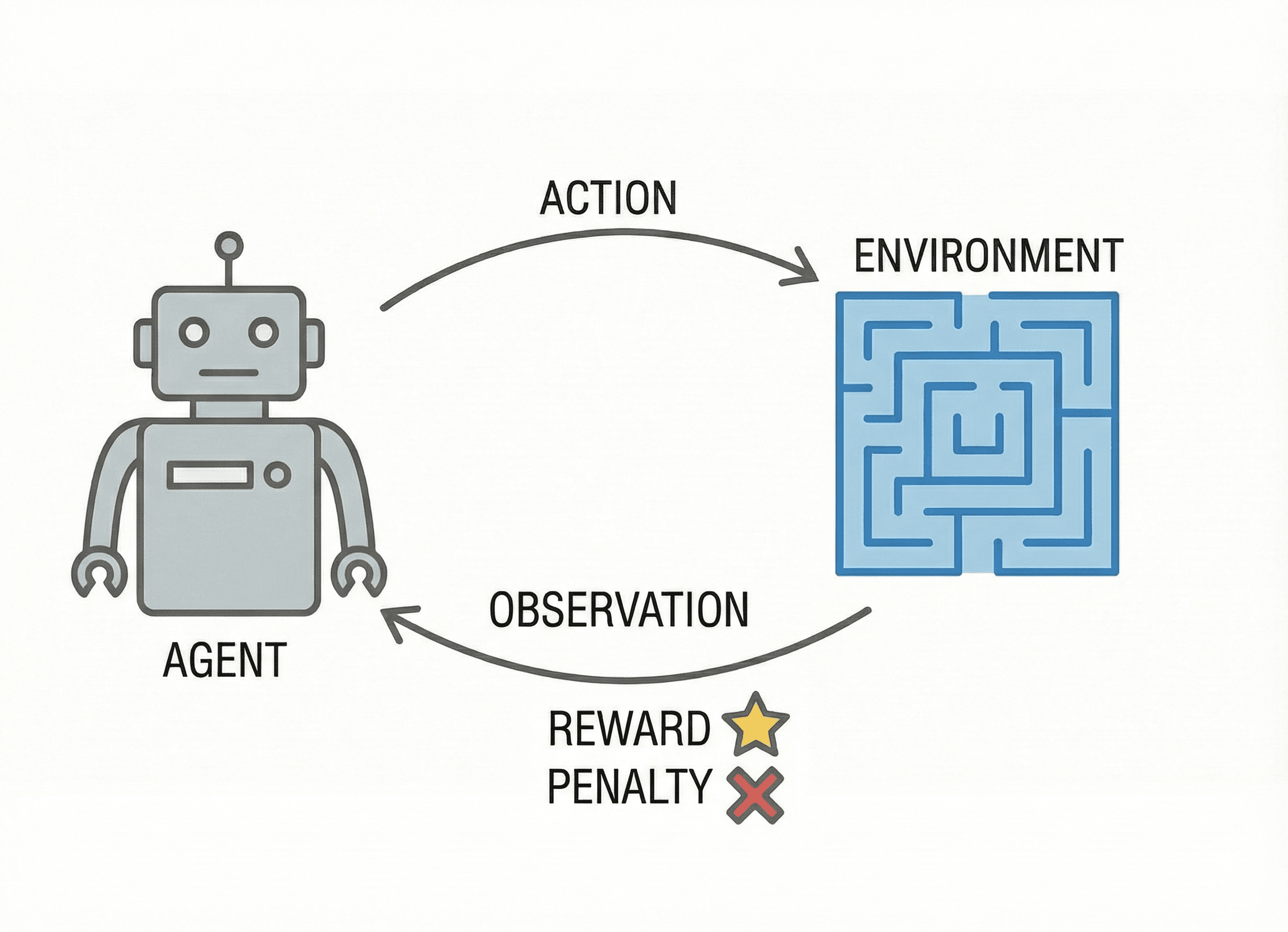

Before picking an algorithm, you must define the environment your agent lives in. This is split into two halves: the State Space (what the agent sees) and the Action Space (what the agent does).

1. The State Space (Inputs)

In almost all real-world control tasks, the state space is Continuous. This means your sensors provide real numbers, like a temperature of 22.5°C or a velocity of 5.4 m/s. Because there are infinite possible values, you cannot use a simple "lookup table." You must use Deep Reinforcement Learning, where a neural network "guesses" the best move based on similar past experiences.

2. The Action Space (Outputs)

This is where the big decision happens.

- Discrete Actions: The agent chooses from a list (for example: Move Left, Move Right, Stop).

- Continuous Actions: The agent controls a "dial" (for example: apply 14.2% motor power).

Note: While you can turn a continuous dial into a few discrete steps, you lose the smoothness required for high-precision control.

The Top Three Contenders

While there are dozens of algorithms, three have become the industry standards for control tasks due to their reliability and performance.

PPO (Proximal Policy Optimization)

- The Vibe: The Reliable Workhorse.

- Best For: Fast simulators where you can collect millions of data points quickly.

- Why it works: PPO is an "On-Policy" algorithm. It learns only from the data it just collected. It is famous for its stability; it uses a mathematical "clip" to ensure the agent doesn't make a massive change to its behavior that ruins its training.

SAC (Soft Actor-Critic)

- The Vibe: The Efficiency King.

- Best For: Real-world robots or slow simulations where every second of data is expensive.

- Why it works: SAC is "Off-Policy," meaning it reuses old data from a memory buffer. What makes it special is Entropy. It rewards the agent for being "random" early on, which forces it to explore every possible solution rather than getting stuck on the first mediocre solution it finds.

TD3 (Twin Delayed DDPG)

- The Vibe: The Precise Specialist.

- Best For: Complex continuous control where SAC might be too computationally heavy.

- Why it works: TD3 is built to fix "overestimation bias," which is the tendency for agents to think an action is much better than it actually is. By using two "critics" to check each other's work, it provides very stable, smooth control.

The Decision Logic: Which One Should You Use?

Step 1: Check your Action Space. If your actions are purely Discrete (like a light switch), start with DQN or PPO. If your actions are Continuous (like a steering wheel), move to Step 2.

Step 2: Assess your Data Budget. If you are using a high-speed simulator where you can generate data very cheaply, start with PPO. It is easier to set up and less likely to break during training.

Step 3: Handle the Real World. If you are training on a physical robot where time is money, you cannot afford to waste data. In this case, use SAC. Its ability to learn from past experiences makes it much faster at reaching a solution with less data.

Professional Tips for Success

Even with the right algorithm, control tasks can be tricky. Always remember:

- Normalize your States: If one sensor reads 0 to 1,000 and another reads 0 to 1, the neural network will struggle. Scale all inputs to a similar range.

- Start Simple: Don't try to solve the hardest version of the problem first. Use a simple reward function before adding complexity.

References

For those looking to dive into the peer-reviewed math behind these tools, these are the essential sources:

- Foundational Text: Sutton, R. S., & Barto, A. G. (2018). Reinforcement Learning: An Introduction. 2nd Edition. MIT Press.

- On SAC: Haarnoja, T., et al. (2018). "Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor." Proceedings of the 35th International Conference on Machine Learning (ICML 2018).

- On TD3: Fujimoto, S., et al. (2018). "Addressing Function Approximation Error in Actor-Critic Methods." Proceedings of the 35th International Conference on Machine Learning (ICML 2018).

- On PPO: Schulman, J., et al. (2017). "Proximal Policy Optimization Algorithms." Technical Report, OpenAI.

- The Control Bridge: Bertsekas, D. P. (2019). Reinforcement Learning and Optimal Control. Athena Scientific.

Note: AI tools were used to assist with writing and editing.